Benchmarking company Vals AI today (16 October) released a highly anticipated report that evaluates how AI products perform on legal research tasks and compares legal AI tools to ChatGPT. However, all the market leaders in the space are missing.

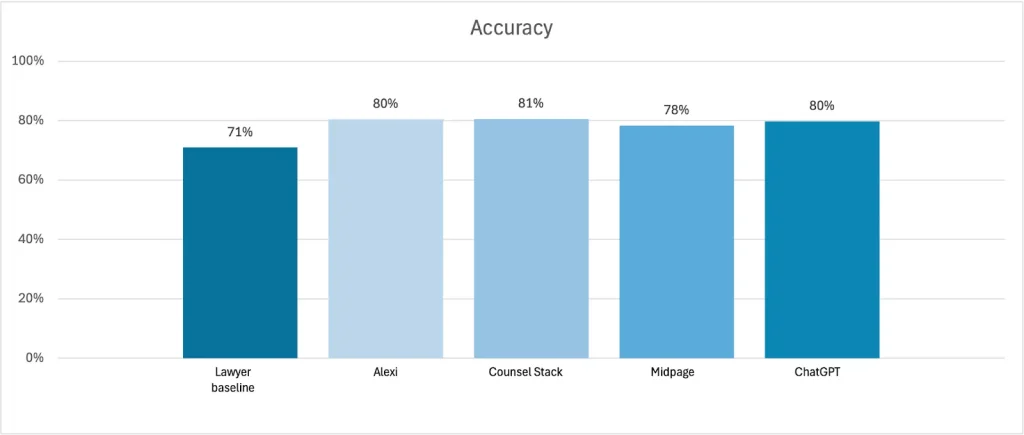

The study assesses the performance of Alexi, Counsel Stack, Midpage and ChatGPT and their ability to respond to 200 US legal research questions.

This follows a Vals report in February this year that tested legal AI tools’ performance in tasks such as document extraction and summarisation, but carved out legal research to be reported on at a later date. The February report included CoCounsel from Thomson Reuters; Vincent AI from vLex; Lexis+AI from LexisNexis (which opted out of the February report); Harvey; and Oliver from Vecflow.

Vals says that although vendors had opted into legal research as part of the first report, those vendors chose not to be included once it was separated out. We have reached out to LexisNexis and Thomson Reuters for clarification.

Legal research has only recently become relevant to Harvey, following its integration with LexisNexis in June.

The research for this report was conducted in the first three weeks of July. Vendors are likely to argue that, given how fast the technology is moving, this is a significant lapse of time.

For the legal research report out today, Vals created a scoring rubric that takes into account prior studies and industry feedback, evaluating the responses for:

- Accuracy (is the response substantially correct);

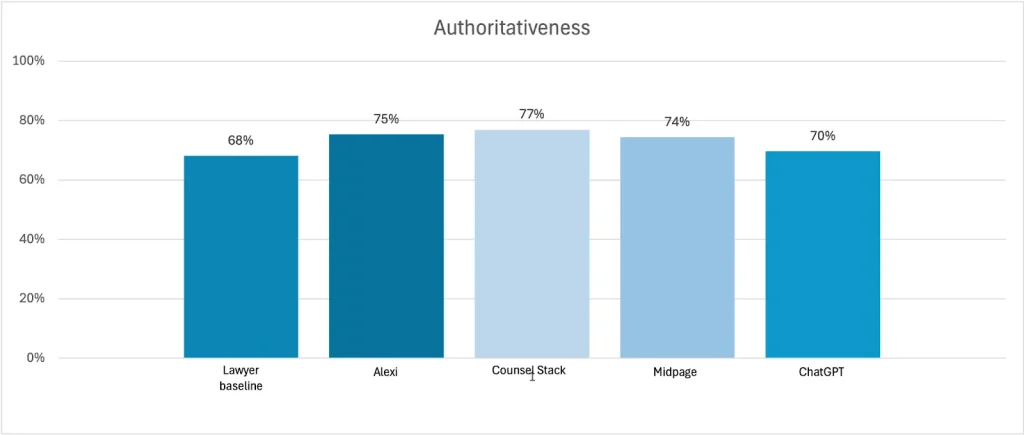

- Authoritativeness (is the response supported by citations); and

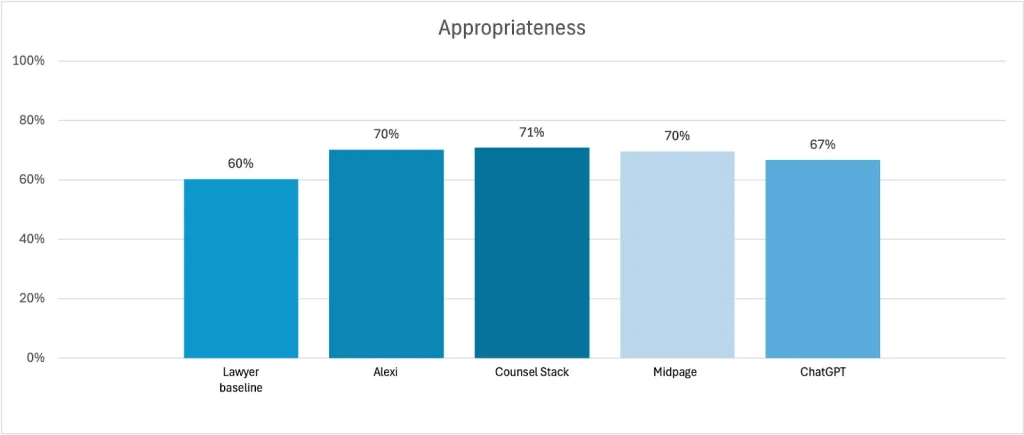

- Appropriateness (is the response easy to understand).

Vals compared the AI products’ performance against a human lawyer baseline.

Contributing law firms included Reed Smith, Fisher Phillips, McDermott Will & Emery, Ogletree Deakins, Paul Hastings and Paul Weiss. They helped to create the dataset used for the study and provided additional guidance and input to ensure the study best reflected real-world legal research questions and answers.

Counsel Stack received the highest score across all three criteria by a small margin. Interestingly, ChatGPT performed almost as well as the legal AI tools and, like all of the tools tested, outperformed the human baseline across all three criteria.

Notably, the report concludes that both legal AI and generalist AI can produce highly accurate answers to legal research questions. On average, the AI products scored within four points of each other. The legal AI products performed better than ChatGPT, but only just.

We’re updating this post with further details. You can download the report here: https://www.vals.ai/industry-reports/vlair-10-14-25

The report’s appendix includes the question-and-answer dataset that was used.